For IT services and SaaS companies, the AI talent gap is no longer a future concern. It is already shaping product roadmaps, delivery margins, and competitive positioning.

Clients now expect AI-enabled platforms. SaaS users expect intelligent automation, predictions, and recommendations by default. Yet many technology leaders are discovering the same constraint: demand for AI capabilities is accelerating faster than their ability to build or hire for them.

The companies pulling ahead are not those hiring the most AI engineers. They are the ones redesigning how AI capability is accessed, scaled, and operationalized.

Why the AI Talent Gap Hits These Companies Harder

According to research from Bain & Company, demand for AI talent continues to grow 21% annually while the supply of in-house expertise lags, slowing adoption for nearly half of executives.

Unlike traditional enterprises, IT services and SaaS firms face a double pressure.

First, AI is part of the product or service itself, not just an internal efficiency tool. Models must be production-grade, reliable, and continuously improved. Second, AI work must be delivered at scale, often across multiple clients or thousands of users, without eroding margins or slowing release cycles.

For many Midwest-based tech companies, including those in Minnesota, this challenge is amplified. The region has strong engineering talent, but senior AI specialists, particularly those with experience in MLOps, data engineering, and scalable architectures, remain scarce and expensive. Hiring alone is slow, risky, and difficult to justify when AI demand fluctuates by roadmap phase or client pipeline.

As a result, leading companies are shifting away from a “hire-first” mindset toward more flexible AI operating models.

How Leading IT Services & SaaS Companies Are Solving It

Distributed AI Product Teams for Faster SaaS Innovation

Many B2B SaaS companies are embedding AI directly into their core platforms: automated workflows, predictive insights, personalization, and intelligent recommendations. Rather than building large in-house AI teams, they are keeping product ownership and customer insight internal while distributing AI execution globally.

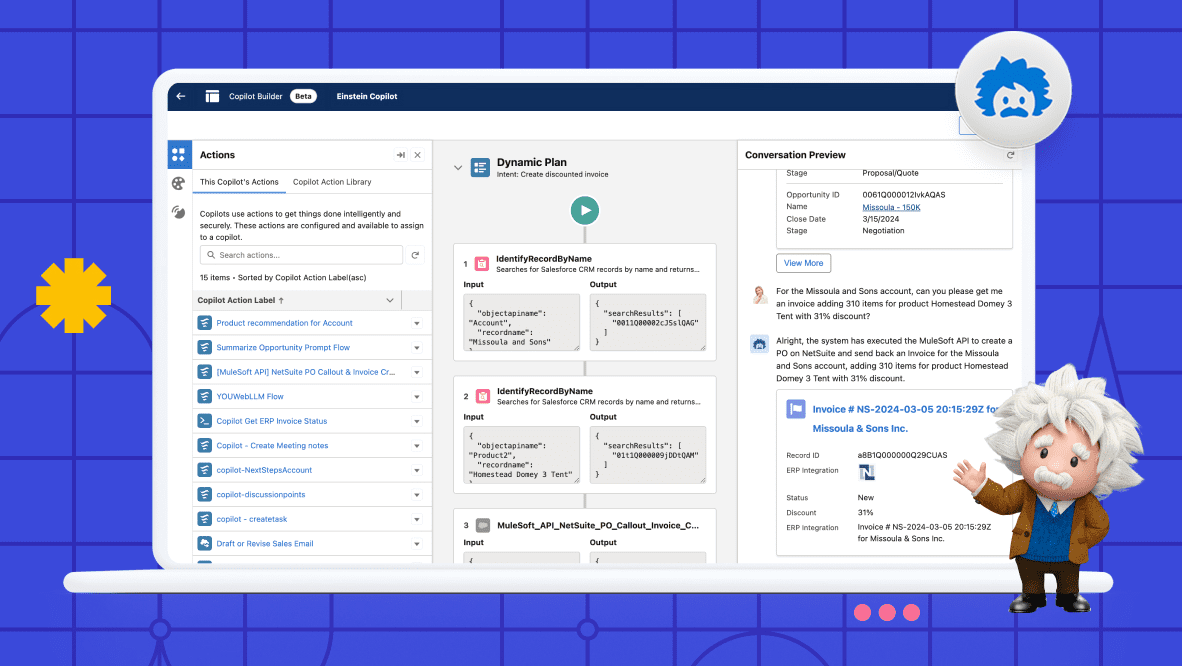

For example, Salesforce embeds AI (Einstein) directly into its products: Sales Cloud, Service Cloud, and Marketing Cloud. Salesforce’s AI strategy works not because it built the largest AI team, but because it structured AI as a product capability. Product ownership, customer insight, and roadmap decisions remain tightly held by internal teams. AI execution, however, is distributed across global engineering units and specialist partners, allowing features to ship faster and evolve continuously across the platform.

For companies looking to adopt a similar approach, the challenge is rarely vision. It is execution: how to extend AI capability without diluting product ownership or overwhelming internal teams.

This is where delivery partners like CMC Global typically operate: not as a substitute for product leadership, but as an extension of the execution layer. By embedding AI engineers, data specialists, and platform expertise into distributed product teams, organizations can scale AI features while keeping strategic control and customer context firmly in-house.

AI-Enhanced Delivery Models in IT Services Firms

Forward-thinking IT services providers are taking a similar approach, but at the delivery model level.

Instead of staffing AI expertise per project, they are building shared AI capabilities that support multiple client engagements. AI specialists, data engineers, and automation experts are pooled and reused, often supported by offshore or nearshore teams that can scale elastically.

Large technology providers have used this approach for years. Even global firms like Microsoft and IBM are known to extend parts of their AI and machine learning work to distributed engineering teams worldwide, particularly for data preparation, model training, and analytics-intensive workloads. The objective is not cost alone, but access to scarce skills at scale.

For mid-sized IT services firms, this model improves margins while making AI services more repeatable and easier to industrialize.

MLOps as a Competitive Advantage for SaaS Scale

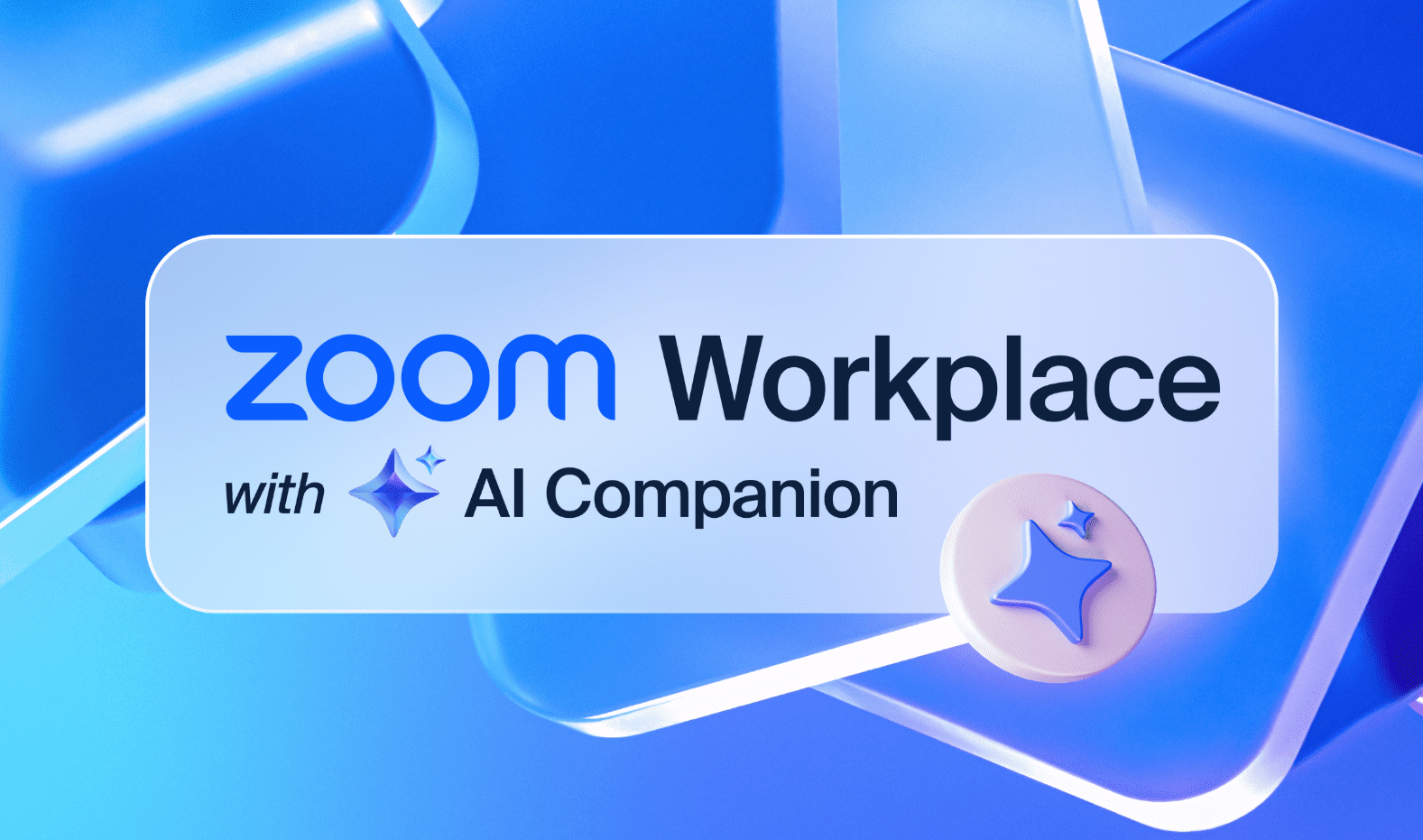

Zoom’s AI features, such as meeting summaries, noise suppression, and real-time transcription, operate in performance-sensitive, latency-critical environments. This forced Zoom to treat AI operations as a core engineering discipline rather than an add-on capability.

Zoom invested in MLOps practices that emphasize continuous monitoring, performance validation, and secure deployment across regions. Models are retrained and tuned without interrupting service quality, even as usage spikes unpredictably.

For SaaS companies pursuing similar scale, this often requires external platform engineering teams that specialize in building and operating AI pipelines while internal teams stay focused on customer experience and roadmap delivery.

This is the role organizations like CMC Global increasingly play: helping SaaS and digital platforms establish the engineering backbone, from CI/CD for models to monitoring and governance, so AI remains a reliable business capability, not a fragile experiment.
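To make "CI/CD for models" more concrete, the validation step in such a pipeline can be thought of as a promotion gate: a candidate model replaces the production model only if it clears agreed quality and latency thresholds. The sketch below is a minimal illustration in Python, not a description of how Zoom, CMC Global, or any specific vendor implements it. The `ModelMetrics` schema, the `should_promote` function, and the threshold values are all hypothetical names and numbers chosen for the example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class ModelMetrics:
    """Offline evaluation results for one model version (hypothetical schema)."""
    accuracy: float        # fraction correct on a held-out set, 0.0 to 1.0
    p95_latency_ms: float  # 95th-percentile inference latency in milliseconds


def should_promote(
    candidate: ModelMetrics,
    production: ModelMetrics,
    max_accuracy_drop: float = 0.01,   # assumed tolerance, tune per product
    latency_budget_ms: float = 200.0,  # assumed latency budget, tune per product
) -> Tuple[bool, List[str]]:
    """Return (decision, reasons). The candidate is promoted only if no check fails."""
    reasons: List[str] = []

    # Quality check: the candidate may not regress beyond the allowed tolerance.
    if candidate.accuracy < production.accuracy - max_accuracy_drop:
        reasons.append("accuracy regressed beyond tolerance")

    # Performance check: the candidate must stay inside the latency budget.
    if candidate.p95_latency_ms > latency_budget_ms:
        reasons.append("p95 latency exceeds budget")

    return (not reasons, reasons)


# Example: a more accurate but slower candidate is held back by the latency check.
production = ModelMetrics(accuracy=0.91, p95_latency_ms=120.0)
candidate = ModelMetrics(accuracy=0.93, p95_latency_ms=250.0)
ok, reasons = should_promote(candidate, production)
print(ok, reasons)
```

In a real pipeline, a gate like this would typically run automatically after each retraining job, with the rejection reasons logged for the governance trail. The point of the design is that the decision to ship a model is made by explicit, reviewable rules rather than by whoever happens to be on call.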

The Vietnam Factor: A Strategic Hub in the Global AI Landscape

As companies redesign their operating models for AI, geography becomes a strategic consideration. Beyond traditional offshore locations, Vietnam has emerged as a pivotal hub in the global IT landscape, particularly for companies building scalable, cost-effective AI execution capacity.

Vietnam is not just a source of raw engineering talent; it has matured into a destination for high-value AI and software product development. With a government aggressively pushing digital transformation and a robust pipeline of more than 50,000 STEM graduates annually, the country offers a compelling blend:

- Deep-Tech Talent Pool: A strong foundation in mathematics and core computer science is fueling specialization in machine learning, data engineering, and MLOps. Major global tech firms have established AI R&D centers in Hanoi and Ho Chi Minh City, further raising the bar and creating a vibrant ecosystem.

- Product Development Mindset: Vietnamese IT firms and engineers have evolved from staff augmentation to true product development partners. This aligns perfectly with the needs of SaaS companies and IT services firms looking for teams that can own the execution of AI features within a defined product roadmap.

- Scalable & Sustainable Economics: For IT services companies, integrating Vietnamese teams into a shared AI capability pool offers a sustainable margin structure. For SaaS companies, it provides a scalable extension of the product team without the steep cost curve of Silicon Valley or other overheated markets.

The lesson for tech leaders is clear: part of designing a resilient AI operating model is strategically mapping talent ecosystems worldwide. Vietnam represents a proven, high-maturity option for building the scalable execution layer that turns AI strategy into reliable, production-grade features and services.

AI Advantage Is Built, Not Hired

The most successful IT services and SaaS companies are not “winning” the AI hiring war. They are redesigning their operating models to ensure consistent access to AI capability – globally, reliably, and at scale.

For C-level leaders, the question is no longer whether AI talent is scarce. It is whether your organization is structured to access and deploy that talent effectively.

For many IT services and SaaS leaders, the next phase of AI maturity is not about hiring more specialists, but about designing an operating model that scales. CMC Global works alongside technology executives to help shape these models, combining global AI execution with strong product and delivery ownership.