Because today’s users can reach the Internet anywhere and at any time, businesses receive hundreds or even thousands of user requests on their websites and applications every minute. This demand puts substantial pressure on servers to deliver high-quality content and media quickly and reliably. Any downtime or interruption can result in a poor user experience and lost revenue.

Load balancers have become an effective tool for service providers to handle this record-high level of application usage. They spread the traffic load across servers so that no single server is overloaded. So what are load balancers, and how do they regulate incoming traffic to servers? Let’s find out in this article!

What is a Load Balancer?

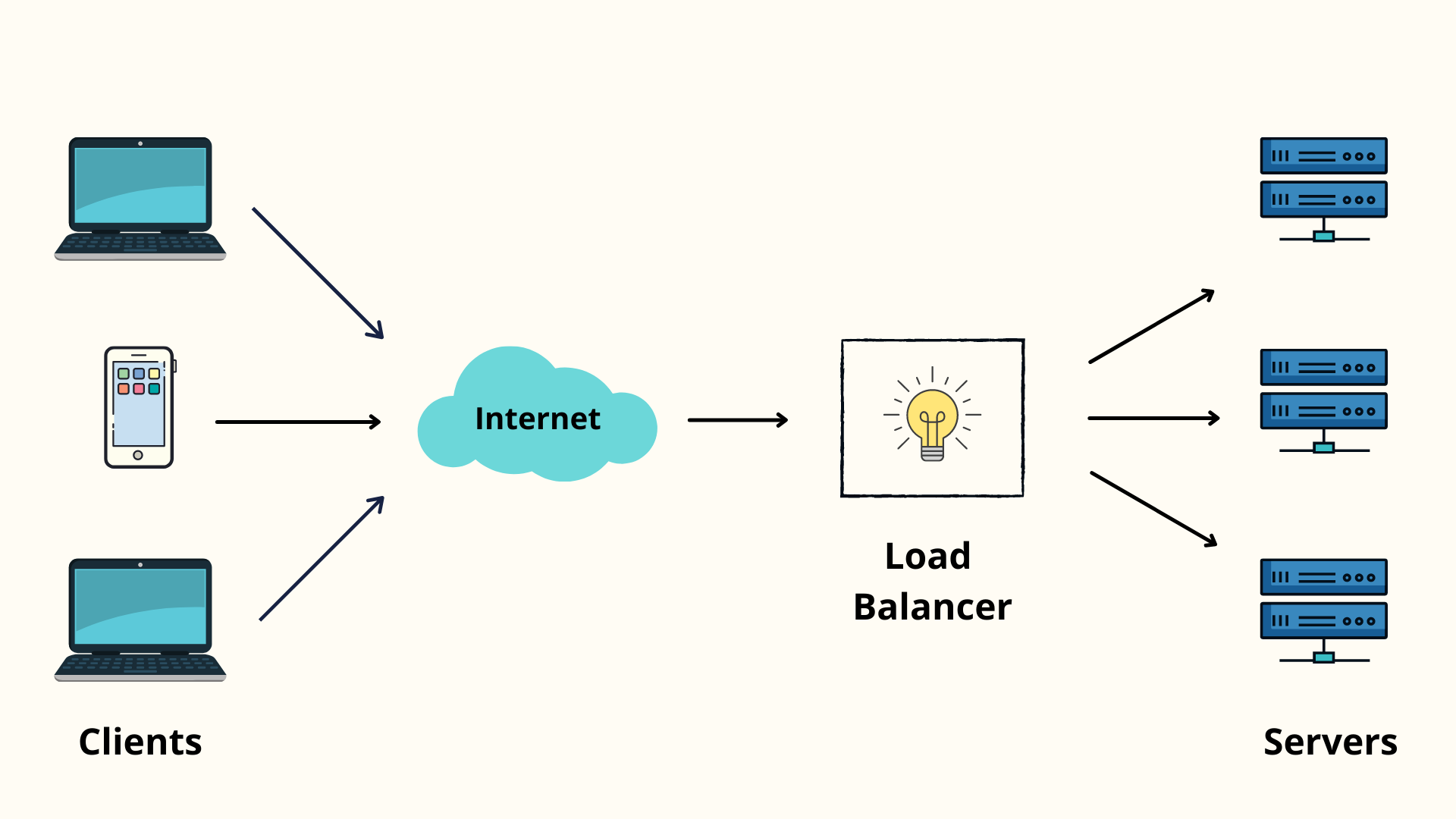

A load balancer is a physical device or software process in a server network that analyzes incoming user requests and spreads them across servers to make sure that no server is overloaded. Load balancers act as the traffic cop standing between client devices and backend infrastructure to route user requests in a manner that optimizes speed, stability, and capacity utilization. When a server is down or reaches a traffic load ceiling, load balancers send new traffic to other servers in the network that are still online or have spare capacity.

In this manner, load balancers perform the following functions:

- Balance out user requests across servers.

- Prevent incoming traffic from going to servers that are down.

- Make it possible to add or remove servers as the traffic load changes.
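The behaviour described above, where traffic goes only to servers that are online and have spare capacity, can be sketched in a few lines of Python. This is a minimal illustration, not how any particular product works: the `Server` fields, the server names, and the capacity ceiling of 100 are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str             # hypothetical identifier
    healthy: bool = True  # result of the most recent health check
    active: int = 0       # requests currently being served
    capacity: int = 100   # assumed ceiling on concurrent requests


def eligible(servers):
    """Return the servers that are online and still have spare capacity."""
    return [s for s in servers if s.healthy and s.active < s.capacity]


pool = [
    Server("app-1", healthy=False),  # down: receives no new traffic
    Server("app-2", active=100),     # at its ceiling: receives no new traffic
    Server("app-3", active=12),      # online with spare capacity
]
candidates = [s.name for s in eligible(pool)]
print(candidates)  # ['app-3']
```

A real load balancer would refresh the `healthy` flag through periodic health checks; here it is set by hand to keep the sketch short.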

How does Load Balancing work?

Load balancers use a predefined pattern, often known as a load balancing algorithm or method, to regulate incoming traffic. Such an algorithm ensures that no single server has to handle more traffic than it can cope with.

Different load balancing algorithms manage this process in different ways, so you’ll need to decide how you want to balance your load before choosing an algorithm.

Here is how a load balancer basically works:

- A user sends a request through an application, and the application sends that request toward the server farm.

- A load balancer receives the request and routes it to a particular server in the server farm according to the algorithm in use.

- The server processes the request and sends its response back to the load balancer.

- The load balancer receives the response and sends it to the user.
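The steps above can be sketched as a small Python class. Everything here is an assumption made for illustration: backends are modelled as plain functions, the names `server-a` and `server-b` are hypothetical, and the selection rule ("always pick the first server") is a placeholder for a real algorithm.

```python
from typing import Callable, Dict, List

# A backend is modelled as a function that takes a request and returns a response.
Backend = Callable[[str], str]


def make_backend(name: str) -> Backend:
    """Build a toy backend that reports which server handled the request."""
    def handle(request: str) -> str:
        return f"{name} handled {request}"
    return handle


class LoadBalancer:
    def __init__(self, backends: Dict[str, Backend],
                 choose: Callable[[List[str]], str]):
        self.backends = backends
        self.choose = choose  # the load balancing algorithm in use

    def handle(self, request: str) -> str:
        target = self.choose(list(self.backends))  # pick a server
        response = self.backends[target](request)  # server processes the request
        return response                            # response goes back to the user


backends = {name: make_backend(name) for name in ("server-a", "server-b")}
lb = LoadBalancer(backends, choose=lambda names: names[0])
reply = lb.handle("GET /index.html")
print(reply)  # server-a handled GET /index.html
```

Keeping the algorithm as a pluggable `choose` function mirrors the idea that the routing pattern is configurable and separate from the forwarding itself.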

Why are Load Balancers important?

Massive traffic loads can overwhelm any single server, which can then disrupt user experience and business processes. Load balancers are important because, by regulating incoming traffic, they help ensure service availability during traffic spikes.

Load balancers also play a key role in scalability in cloud computing. As cloud users add and remove servers to accommodate their fluctuating computing needs, load balancers regulate the traffic going to those servers. Without load balancing, newly added servers would receive incoming traffic in an uncoordinated manner, or not at all, while existing servers became overloaded.
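As a rough illustration of this point, the sketch below keeps a mutable pool of servers and rotates through it, so a server added during a scale-up starts receiving requests straight away. The `Pool` class and the `web-1`, `web-2`, `web-3` names are hypothetical, and simple rotation stands in for whichever algorithm is actually configured.

```python
class Pool:
    """A server pool that can grow or shrink while traffic is flowing."""

    def __init__(self, servers):
        self.servers = list(servers)
        self._next = 0  # counter used to rotate through the pool

    def add(self, server):
        self.servers.append(server)

    def remove(self, server):
        self.servers.remove(server)

    def pick(self):
        # Index by the current pool size so membership changes take effect at once.
        server = self.servers[self._next % len(self.servers)]
        self._next += 1
        return server


pool = Pool(["web-1", "web-2"])
before = [pool.pick() for _ in range(2)]
pool.add("web-3")  # scale up
after = [pool.pick() for _ in range(3)]
print(before)  # ['web-1', 'web-2']
print(after)   # ['web-3', 'web-1', 'web-2']
```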

Load balancing algorithms can even detect when a server (or a set of servers) is likely to become overloaded and redirect traffic to other nodes that are deemed healthier. Such proactivity can minimize the chance of your application becoming unavailable.

Hardware vs. Software Load Balancing

There are two types of load balancers: hardware and software.

Hardware Load Balancers

Hardware load balancers are pieces of physical hardware that direct traffic to servers based on criteria such as the number of existing connections to a server, server performance, and processor utilization.

Hardware load balancers require maintenance and updates as new versions and security patches are released. They typically offer better performance and control, along with a more comprehensive range of features such as Kerberos authentication and SSL hardware acceleration. In return, they require a certain level of proficiency in load balancer management and maintenance.

Furthermore, hardware load balancers are less flexible and scalable due to their hardware-based nature, and there’s a tendency for businesses to over-provision hardware load balancers.

Software Load Balancers

Software load balancers are often easier to deploy than their hardware counterparts, and they are also more flexible and cost-effective. They let you configure load balancing to your application’s specific needs, at the cost of more work to set up.

Software load balancers are available either as ready-to-install solutions that require configuration and management or as a cloud service (Load Balancer as a Service, or LBaaS). The latter spares you routine tasks such as maintenance, management, and upgrading, which are handled by the service provider.

Software load balancers are more popular in software development environments because developers often need to change the load balancer configuration to achieve peak performance for an application. With hardware load balancers this becomes a major inconvenience, as developers have to rely constantly on the infrastructure team.

Different Types of Load Balancing Algorithms

A load balancing algorithm is the logic, a set of predefined rules, that a load balancer uses to route traffic among servers.

There are two primary approaches to load balancing. Dynamic load balancing uses algorithms that distribute traffic based on the current state of each server. Static load balancing distributes traffic without taking this state into consideration; some static algorithms route an equal amount of traffic, either in a specified order or at random, to each server in a group.

Dynamic Load Balancing Algorithms

- Least connection. The least connection algorithm identifies which servers currently have the fewest active connections and directs traffic to those servers. It assumes that all connections require roughly equal processing power.

- Weighted least connection. This variant lets administrators assign a different weight to each server, on the assumption that some servers can handle more connections than others. Traffic goes to the server with the fewest connections relative to its weight.

- Weighted response time. This algorithm averages out the response time of each server and combines that with the number of requests each server is serving to determine where to send traffic. This algorithm can ensure faster service for users by sending traffic to the servers with the quickest response time.

- Resource-based. The resource-based algorithm distributes traffic based on the resources each server currently has available. Specialized software (called an “agent”) running on each server measures that server’s available CPU and memory, and the load balancer queries the agent before sending traffic to its server.
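To make the dynamic algorithms more concrete, here is a minimal Python sketch of the first three. The server names, connection counts, and weights are invented for the example. The scoring rule in `weighted_response_time` (average response time multiplied by current load) is only one plausible way to combine the two signals and is an assumption, since real products define their own formulas.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str                     # hypothetical identifier
    active: int                   # connections currently open
    weight: int = 1               # administrator-assigned capacity weight
    avg_response_ms: float = 0.0  # measured average response time


def least_connection(servers):
    """Pick the server with the fewest open connections."""
    return min(servers, key=lambda s: s.active)


def weighted_least_connection(servers):
    """Pick the server with the fewest connections relative to its weight."""
    return min(servers, key=lambda s: s.active / s.weight)


def weighted_response_time(servers):
    """Assumed scoring: average response time scaled by current load."""
    return min(servers, key=lambda s: s.avg_response_ms * (s.active + 1))


pool = [
    Server("a", active=10, weight=4, avg_response_ms=80.0),
    Server("b", active=6, weight=1, avg_response_ms=20.0),
    Server("c", active=8, weight=2, avg_response_ms=50.0),
]
print(least_connection(pool).name)           # b (6 connections)
print(weighted_least_connection(pool).name)  # a (10 / 4 = 2.5)
print(weighted_response_time(pool).name)     # b (20 * 7 = 140)
```

A resource-based variant would follow the same shape, with the sort key replaced by the CPU and memory figures reported by each server's agent.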

Static Load Balancing Algorithms

- Round robin. Round robin load balancing rotates user requests across servers in a cyclical manner. As a simplified example, assume that an enterprise has a group of three servers: Server A, Server B, and Server C. Under round robin, the first request is sent to Server A, the second to Server B, and the third to Server C; the fourth request goes back to Server A, and the load balancer keeps routing incoming traffic in this order. As a result, each server receives an equal share of the requests.

- Weighted round robin. Weighted round robin builds on the round robin method. Each server in the farm is assigned a fixed numerical weight by the network administrator, and servers deemed able to handle more traffic receive a higher weight and therefore a larger share of requests. In DNS-based load balancing, the weighting can be configured within DNS records.

- IP hash. IP hash load balancing combines the source and destination IP addresses of incoming traffic and uses a mathematical function to convert them into hashes. Connections are assigned to specific servers based on their corresponding hashes. This algorithm is particularly useful when a dropped connection needs to be returned to the same server that originally handled it.
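The three static algorithms above can be sketched in Python as follows. This is an illustration under stated assumptions: the weighted variant simply repeats each server according to its weight (production balancers often interleave more smoothly), SHA-256 is an arbitrary choice of hash function, and the IP addresses come from ranges reserved for documentation.

```python
import hashlib
from itertools import cycle


def round_robin(servers):
    """Yield servers in a fixed repeating order."""
    return cycle(servers)


def weighted_round_robin(weights):
    """Simple expansion: repeat each server as many times as its weight."""
    expanded = [name for name, w in weights.items() for _ in range(w)]
    return cycle(expanded)


def ip_hash(src_ip, dst_ip, servers):
    """Map a source/destination address pair to one server, deterministically."""
    digest = hashlib.sha256(f"{src_ip}-{dst_ip}".encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


servers = ["Server A", "Server B", "Server C"]

rr = round_robin(servers)
rr_order = [next(rr) for _ in range(4)]
print(rr_order)  # ['Server A', 'Server B', 'Server C', 'Server A']

wrr = weighted_round_robin({"Server A": 2, "Server B": 1})
wrr_order = [next(wrr) for _ in range(6)]
print(wrr_order)  # Server A appears twice for every Server B

# The same address pair always lands on the same server.
first = ip_hash("203.0.113.7", "198.51.100.1", servers)
second = ip_hash("203.0.113.7", "198.51.100.1", servers)
print(first == second)  # True
```

Note that with a plain modulo, as used here, adding or removing a server changes the mapping for many clients; that trade-off is outside the scope of this sketch.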

Final Words

We hope that this article has provided you with valuable knowledge about load balancers in cloud computing. No organization wants to deal with server overload, and load balancers are an effective tool for avoiding this problem.

If your company doesn’t have the expertise to execute your cloud migration project in-house, it’s best to find a good cloud migration service provider to help you.

CMC Global is among the top three cloud migration service providers in Vietnam. We operate a large certified team of cloud engineers – specializing in Amazon AWS, Microsoft Azure, and Google Cloud – who are able to migrate your legacy assets to the cloud in the most cost-effective way and in the least amount of time.
